Extreme Efficiency

UX Design, Artificial Intelligence

March 2018 Individual Project

Timeline: 5 weeks

Tools: Rapid Prototyping, Machine Learning (GRT), Unity3D, Kinect, openFrameworks, Processing

A playful AI prototype that maintains traffic efficiency by measuring pedestrian emotion.

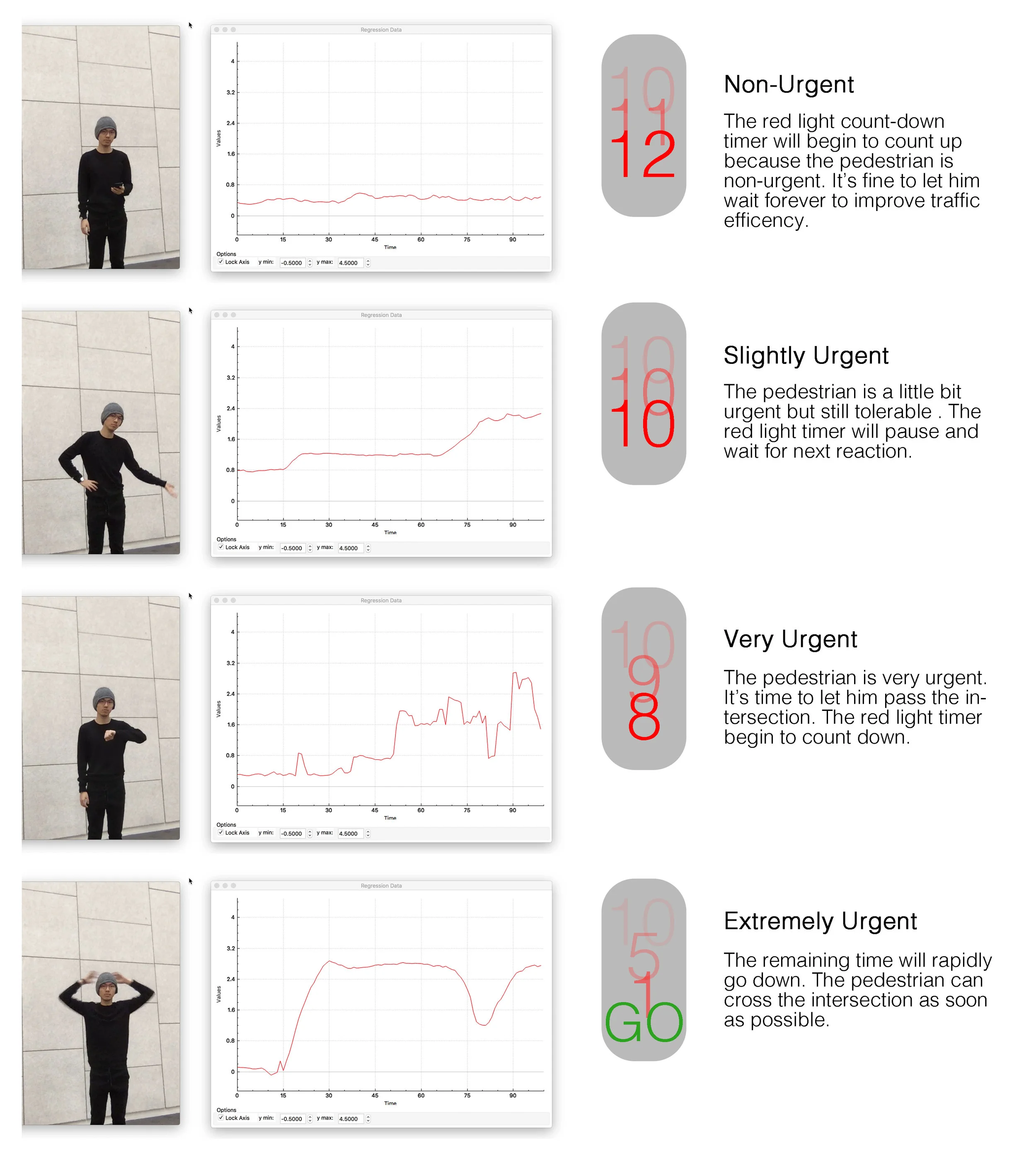

This is an AI traffic system that measures how urgent a pedestrian is. A camera captures the pedestrian's body movement, and motion features extracted from it are fed into a machine learning model for analysis. Aggressive movements indicate a high urgency level; calm, non-aggressive movements indicate a low one. To maximize traffic efficiency, non-urgent pedestrians wait longer to cross the street, while urgent pedestrians wait less.
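The rule "higher urgency, shorter wait" can be written as a small mapping function. This is a minimal sketch, assuming the model outputs an urgency score normalized to [0, 1] and that wait time falls linearly as urgency rises; the function name and the 5 s / 60 s bounds are hypothetical placeholders, not values from the project.

```python
def wait_time_for_urgency(urgency: float,
                          min_wait_s: float = 5.0,
                          max_wait_s: float = 60.0) -> float:
    """Map an urgency score in [0, 1] to a pedestrian wait time in seconds.

    Higher urgency gives a shorter wait. The default bounds are
    illustrative assumptions, not values from the original project.
    """
    # Clamp the model output, since a regression model can overshoot [0, 1].
    u = max(0.0, min(1.0, urgency))
    # Linear interpolation: u = 0 waits max_wait_s, u = 1 waits min_wait_s.
    return max_wait_s - u * (max_wait_s - min_wait_s)


for u in (0.0, 0.5, 1.0, 1.3):
    print(f"urgency={u:.1f} -> wait {wait_time_for_urgency(u):.1f} s")
```

A linear mapping is only the simplest choice; any decreasing function of urgency would express the same idea.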

This experiment reveals a potential conflict between AI systems and the shared values of society. The system performs well at improving traffic efficiency, but at the same time it undermines those shared values. There is no wrong side in this case, only different standpoints. When the future is ruled by AI, what should we do to adapt ourselves to this new nature?

Anyway, enjoy the law of AI nature. Be patient.

Design Process Overview

——————————————————————————————————————————————————————————————————————————————

I used the Gesture Recognition Toolkit (GRT) to build the machine learning module. The project needs a continuous urgency level as output, computed from multiple body-gesture inputs, so I chose regression mode with the Artificial Neural Network (ANN) training method. I also wrote some additional code in TensorFlow on Google Colab to help improve performance.
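GRT's ANN regression runs in C++, and its API is not reproduced here. As a library-free illustration of the same idea, the sketch below trains a one-hidden-layer network (tanh hidden units, linear output) by stochastic gradient descent on squared error, mapping a gesture feature vector to a continuous urgency value. The class, the two input features, the target formula and all hyperparameters are hypothetical; `n_hidden` is the kind of network-size setting that has to be tuned by experiment.

```python
import math
import random


class TinyMLP:
    """One hidden layer (tanh) and a linear output: a stand-in for an ANN regressor."""

    def __init__(self, n_in: int, n_hidden: int, seed: int = 0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def _hidden(self, x):
        return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(self.w1, self.b1)]

    def predict(self, x) -> float:
        h = self._hidden(x)
        return sum(w * hj for w, hj in zip(self.w2, h)) + self.b2

    def train_step(self, x, target: float, lr: float) -> float:
        """One SGD step on 0.5 * (y - target)**2; returns the loss before the update."""
        h = self._hidden(x)
        err = sum(w * hj for w, hj in zip(self.w2, h)) + self.b2 - target
        for j, hj in enumerate(h):
            # Gradient at hidden unit j's pre-activation (tanh' = 1 - tanh^2),
            # computed before w2[j] is updated.
            grad_pre = err * self.w2[j] * (1.0 - hj * hj)
            self.w2[j] -= lr * err * hj
            self.b1[j] -= lr * grad_pre
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * grad_pre * xi
        self.b2 -= lr * err
        return 0.5 * err * err


def mean_loss(model, data):
    return sum(0.5 * (model.predict(x) - t) ** 2 for x, t in data) / len(data)


# Hypothetical toy data: two motion features in [0, 1] -> urgency in [0, 1].
rng = random.Random(42)
data = []
for _ in range(200):
    speed, swing = rng.random(), rng.random()
    data.append(([speed, swing], 0.6 * speed + 0.4 * swing))

model = TinyMLP(n_in=2, n_hidden=4)
before = mean_loss(model, data)
for _ in range(100):
    for x, t in data:
        model.train_step(x, t, lr=0.1)
after = mean_loss(model, data)
print(f"mean loss before: {before:.4f}, after: {after:.4f}")
```

Regression rather than classification is what lets the output vary smoothly between calm and urgent instead of snapping to a fixed set of labels.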

Kinect, openFrameworks, Processing

The first part of the project was recognizing people's movement in real time. I used the Kinect with the openFrameworks API, along with Processing, to get the whole movement recognition system working on macOS with OSC output. The process was tough and arduous because of the outdated Kinect API, but in the end I made it work.
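OSC output lets the movement recognizer stream joint data to a separate machine learning process over UDP. Below is a minimal sketch of how one such message is encoded under the OSC 1.0 specification: a null-padded address string, a type-tag string, then big-endian float32 arguments. The `/kinect/hand_right` address, the joint values and the port are hypothetical; the project's actual message layout is not documented.

```python
import struct


def osc_string(s: str) -> bytes:
    """Encode an OSC-string: ASCII bytes, at least one null, padded to a multiple of 4 bytes."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *values: float) -> bytes:
    """Build an OSC 1.0 message whose arguments are all float32 ('f' type tags)."""
    type_tags = "," + "f" * len(values)
    args = b"".join(struct.pack(">f", v) for v in values)  # big-endian IEEE 754 float32
    return osc_string(address) + osc_string(type_tags) + args


# Hypothetical address and right-hand joint position (x, y, z).
packet = osc_message("/kinect/hand_right", 0.12, -0.40, 1.85)

# Sending would be a single UDP datagram, e.g. (port is an assumption):
#   import socket
#   socket.socket(socket.AF_INET, socket.SOCK_DGRAM).sendto(packet, ("127.0.0.1", 6448))
```

In practice an OSC library in openFrameworks or Processing handles this encoding; the sketch only shows what travels over the wire.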

Next, I connected the movement recognition system to the machine learning system. The first training test used classification mode, which simply confirms whether the parameters collected from the Kinect can distinguish different body gestures. The second training test used regression mode with the ANN training method. I trained the model myself, pairing body-gesture inputs with different output values, to find the best input parameters and the best number of layers for the ANN.
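The write-up does not list which Kinect parameters were used. One plausible family of features for separating aggressive from calm movement is how fast a tracked joint moves over a short window. The sketch below is hypothetical: it assumes joint positions arrive as (x, y, z) tuples at a fixed frame rate and summarizes one joint as mean speed, peak speed and mean change in speed. Feature vectors like this can feed either a classifier (as in the first test) or a regressor (as in the second).

```python
import math
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def motion_features(track: Sequence[Point3], fps: float = 30.0) -> List[float]:
    """Summarize one joint's trajectory as [mean speed, peak speed, mean change in speed].

    Speeds are in position units per second; the last feature is in units per second squared.
    """
    if len(track) < 3:
        raise ValueError("need at least three frames")
    dt = 1.0 / fps
    # Frame-to-frame speed of the joint.
    speeds = [math.dist(a, b) / dt for a, b in zip(track, track[1:])]
    # How abruptly that speed changes between consecutive frames.
    speed_changes = [abs(s2 - s1) / dt for s1, s2 in zip(speeds, speeds[1:])]
    return [sum(speeds) / len(speeds), max(speeds), sum(speed_changes) / len(speed_changes)]


# Hypothetical tracks: a steady walk versus a jerky, stop-start movement.
calm = [(0.0, 0.0, 2.0 + 0.01 * i) for i in range(10)]
jerky = [(0.0, 0.0, 2.0), (0.1, 0.0, 2.0), (0.1, 0.0, 2.0), (0.3, 0.0, 2.0), (0.3, 0.0, 2.0)]
print(motion_features(calm))
print(motion_features(jerky))
```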

First training and testing in classification mode

Further training and testing in ANN mode for urgency recognition

I invited a few participants to train the final model and connected its output to a simple traffic light program to test the idea.
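Per-frame regression outputs tend to be noisy, so a traffic light program would likely smooth them before acting. The controller below is a hypothetical sketch, not the project's program: it smooths predictions with an exponential moving average and turns the signal to WALK once a minimum wait has passed and the smoothed urgency is above a threshold, or once a maximum wait is reached. The threshold, smoothing weight and wait bounds are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class CrossingController:
    """Hypothetical pedestrian signal driven by per-frame urgency predictions."""
    min_wait_s: float = 5.0     # nobody crosses before this (assumed value)
    max_wait_s: float = 60.0    # everybody crosses by this (assumed value)
    threshold: float = 0.7      # smoothed urgency needed for an early WALK
    alpha: float = 0.2          # EMA weight given to the newest prediction
    urgency: float = 0.0        # smoothed urgency in [0, 1]
    waited_s: float = 0.0
    state: str = "DONT_WALK"

    def update(self, predicted_urgency: float, dt: float) -> str:
        """Advance one frame and return the signal state."""
        if self.state == "WALK":
            return self.state
        u = max(0.0, min(1.0, predicted_urgency))        # clamp the regression output
        self.urgency += self.alpha * (u - self.urgency)  # exponential moving average
        self.waited_s += dt
        early = self.waited_s >= self.min_wait_s and self.urgency >= self.threshold
        if early or self.waited_s >= self.max_wait_s:
            self.state = "WALK"
        return self.state


def seconds_until_walk(predictions, fps: float = 30.0) -> float:
    """Feed per-frame predictions until the signal turns to WALK."""
    ctrl = CrossingController()
    for p in predictions:
        if ctrl.update(p, 1.0 / fps) == "WALK":
            return ctrl.waited_s
    return float("inf")


urgent = seconds_until_walk([1.0] * 3000)              # sustained aggressive movement
calm = seconds_until_walk([0.0] * 3000)                # calm pedestrian
spike = seconds_until_walk([1.0] * 3 + [0.0] * 3000)   # brief spike, then calm
print(f"urgent: {urgent:.1f} s, calm: {calm:.1f} s, spike: {spike:.1f} s")
```

A three-frame spike of high urgency raises the smoothed value only to about 0.49, below the 0.7 threshold, so it does not shorten the wait; a sustained high-urgency reading does.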

The final prototype was built in Unity3D, which allowed me to simulate the situation rapidly. Even as a simulation, it demonstrates the concept: 1. Pedestrians can block the street and create temporary traffic jams. 2. The urgency recognition AI system can maximize traffic efficiency.

AI traffic light simulation developed in Unity3D with the urgency recognition AI system

The final installation is a live interactive system that lets people experience this idea firsthand.